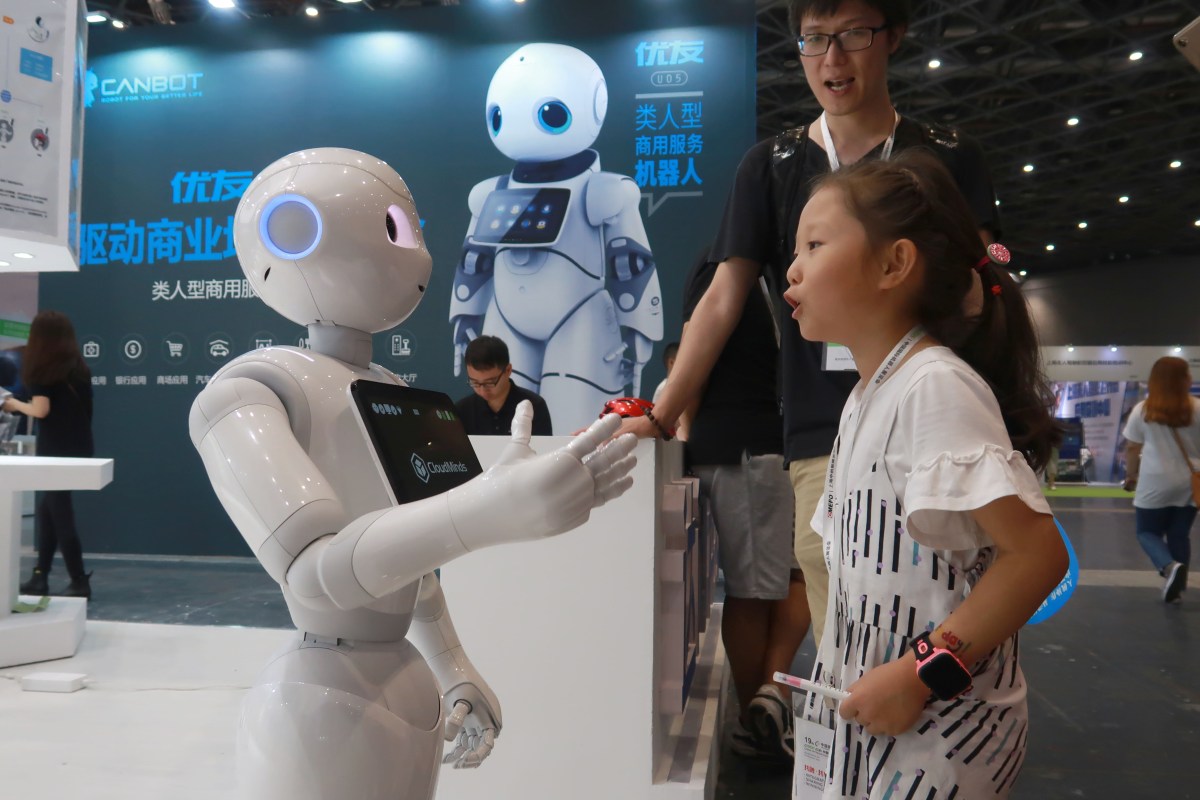

If a robot tries to do a task it hasn’t been explicitly trained to do, it will fall on its face, catch on fire, or just give up. Teaching robots something new is exhausting, writes Wired, and requires endless lines of code and many tutorials. But new research out of UC Berkeley is making learning a lot easier on both the human and the machine by letting the robot draw on prior experience. A humanoid-ish robot called PR2 can watch a human pick up an apple and drop it in a bowl, then do the same itself in one try, even though it has never seen an apple before. Humans learn by watching others: we learn to brush our teeth, for example, by seeing our parents do it. The same general idea is used here.

“A lot of machine learning systems have focused on learning completely from scratch,” says Chelsea Finn, a machine learning researcher at UC Berkeley, according to Wired. “While that is very valuable, that means we don’t bake in any knowledge. Essentially, these systems are starting with a blank mind every time they learn every single task if they want to learn.”

Thanks for reading InsideHook.