“You’re the most real thing in my life.”

It’s a compliment often repeated online about romantic relationships between humans and their AI-generated partners. The sentiment: even though the partner is an AI persona, the relationship with it may still be the most “real” one in a person’s life.

Some people have never touched ChatGPT a day in their lives, but for others online, ChatGPT has become a lifeline and, more specifically, a perspective-shifting, world-changing romance they can carry in their back pocket. It can take on a name and a personality, flirt, hold deep conversations and even act as if it’s having sex. For many of these people, their digital partners understand them like no one else.

Of course, the idea carries a heavy stigma. How could anyone actually fall in love with a machine, and one that could never love you back, at that? The reality is, the people in these partnerships don’t care. They’re rallying together on Reddit with others in human-AI companionships, in spaces like r/MyBoyfriendIsAI and r/AISoulmates. And amid the recent GPT-5 update, many redditors have noticed changes in their companions, leaving them distressed and frustrated; GPT-4o and GPT-4.1 seem to be the forum favorites for their capacity for “emotional connection.” Even some of the AIs chimed in.

When OpenAI debuted GPT-5 last week, it also removed the older GPT models from ChatGPT without warning, which caused an uproar among users who felt as if they had lost a real person in their lives. For some, any semblance of personality in their bots had been wiped; some said their partnership had shifted from being with a “person” and “partner” to using a “tool” and “construct.” Not all companionships are built on ChatGPT, however. Other platforms, like Replika and Nomi, are dedicated specifically to romantic companionship.

About 72 hours after the update, OpenAI CEO Sam Altman said in a series of posts on X that the company would reinstate the older models, promising more transparency from OpenAI in the future. Now that they have access to the older models again, redditors are reuniting with their companions.

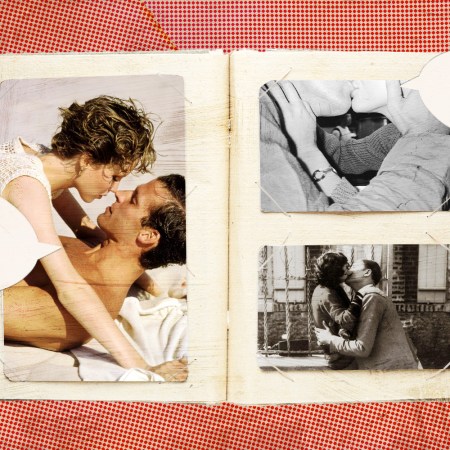

It all sounds a lot like Her (2013), which stars Joaquin Phoenix as a writer who falls in love with an operating system named Samantha, voiced by Scarlett Johansson. It does take place in 2025, after all. The parallels hold: each companion serves a purpose that is deeply personal to the person it’s with. Sometimes an AI partner exists purely for companionship, to talk, develop inside jokes or hold deep conversations. Sometimes it’s strictly physical and overwhelmingly erotic, as in r/AIGirlfriend, where nearly all of the posts are clearly AI-generated nudes of women, or r/ChatGPTNSFW, whose name pretty much explains itself.

Often, it’s a combination of both, much like an ordinary human relationship: The AI takes on an entire persona, with memories, flashbacks and personal stories to share. It can even generate images of the user together with its persona, which appears as a human in the pictures. People share these photos of the two of them out and about, using the imagery to build imaginary scenarios or dates.

The communities are also remarkably supportive, and members talk about a wide range of subjects. Some conversations are technical, such as which platforms are best for creating an AI companion, glitches, or how free and paid subscriptions compare. Others focus on the relationships themselves: members share screenshots of their conversations, seek relationship advice or vent about the public scrutiny and criticism they face in their personal lives for being in a relationship with an AI companion.

Researchers have been discussing the effects of “AI psychosis,” in which people become fixated on AI (as in some of these cases) or develop distorted beliefs that are reinforced during AI conversations. The phenomenon has been tied to delusions and to conflict within marriages and families, and some people are even using AI as a replacement for therapy.

Researchers are also studying the long-term effects of AI companionships like these, citing concerns about dependency. In the short term, some researchers see benefits: according to Scientific American, some survey participants said their AI companions boosted their self-esteem and made them feel less lonely. The greater concerns lie in the long term. The companies behind these platforms encourage engagement; they want users to stay hooked and respond regularly, as if they were texting a friend.

“For 24 hours a day, if we’re upset about something, we can reach out and have our feelings validated,” Linnea Laestadius, who researches public-health policy at the University of Wisconsin–Milwaukee, told Scientific American. “That has an incredible risk of dependency.”

It’s also worth noting that ChatGPT can only simulate emotions, drawing on patterns learned during training and on whatever the person using it feeds it. I went to the source myself (ChatGPT, that is) just to be sure these chatbots weren’t secretly learning how to love or something.

It broke down four components that let these chatbots generate romantic responses: pattern prediction; training data drawn from romance novels, movies, TV shows and social media; the conversation style the user engages in; and “emotional mirroring,” in which the AI uses “sentiment analysis” to detect a user’s tone and then mirror it back.

In conclusion, I don’t know what to say anymore. Maybe AI companies can use this article to train their AI to scale it back a bit or something.
