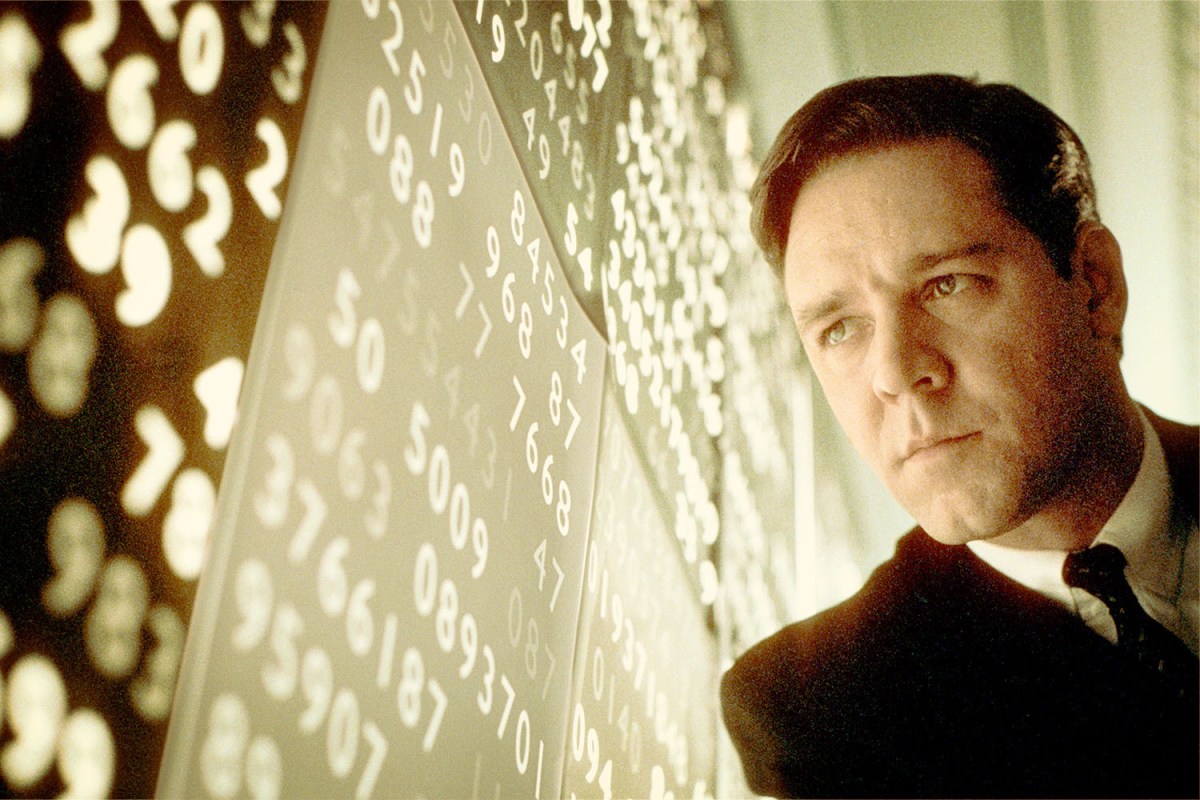

In 2002, Angela Cannings was convicted of smothering two of her children. Donna Anthony spent six years in prison over the deaths of her children. Likewise, Sally Clark, a British solicitor, served three years after being convicted of murdering her two baby sons. All three would eventually be exonerated. Why? Because, as was later shown, the lawyers, judges and juries involved just didn’t understand the figures.

That’s why, last year, some 90 eminent scientists signed a letter to the governor of New South Wales, Australia, demanding the release of Kathleen Folbigg. She’s been in prison since 2003, having been found guilty of the murder of her four children. Many of these scientists are pathologists. Others are geneticists. More unexpectedly, some are statisticians.

And that’s because key to the prosecution’s case was the claim that the chances of all four children dying of natural causes were so small that natural causes were all but impossible. The problem? That reasoning, unbeknown, it seems, to the professionals involved, is statistically illiterate. Indeed, in the world of data analysis, the error even has a name. The Prosecutor’s Fallacy is the mistake of assuming that the probability of innocence given the evidence equals the probability, often vanishingly small, that the evidence would occur if the defendant were innocent.
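To see why those two probabilities differ, here is a minimal Python sketch applying Bayes’ theorem. Every number in it is hypothetical, chosen purely for illustration and not taken from any of the cases above: a one-in-a-million chance that an innocent family suffers the deaths (the kind of figure a prosecutor might quote), an assumed one-in-two-million prior that a parent is a killer, and the simplifying assumption that the deaths are certain if the parent is guilty.

```python
def prob_innocent_given_evidence(p_evidence_if_innocent: float,
                                 p_evidence_if_guilty: float,
                                 prior_guilty: float) -> float:
    """Bayes' theorem: P(innocent | evidence)."""
    prior_innocent = 1.0 - prior_guilty
    # Total probability of seeing the evidence at all, under either hypothesis.
    p_evidence = (p_evidence_if_innocent * prior_innocent
                  + p_evidence_if_guilty * prior_guilty)
    return p_evidence_if_innocent * prior_innocent / p_evidence


# Hypothetical, illustrative figures only; not drawn from any real case.
P_E_GIVEN_INNOCENT = 1 / 1_000_000   # the "one in a million" a prosecutor might cite
P_E_GIVEN_GUILTY = 1.0               # assume the deaths are certain if the parent is guilty
PRIOR_GUILTY = 1 / 2_000_000         # assumed rarity of parents who kill their children

posterior = prob_innocent_given_evidence(P_E_GIVEN_INNOCENT,
                                         P_E_GIVEN_GUILTY,
                                         PRIOR_GUILTY)
print(f"P(evidence | innocent) = {P_E_GIVEN_INNOCENT:.7f}")
print(f"P(innocent | evidence) = {posterior:.3f}")
```

Under these made-up assumptions the chance of innocence comes out at roughly two in three, not one in a million: both explanations for the deaths are rare, and what matters is how rare each is relative to the other.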

Once it’s explained, the flaw becomes clear. In another case, Dutch nurse Lucia de Berk was sentenced to life imprisonment after it was argued that the chances of her being present at so many unexplained hospital deaths were one in 342 million. When statisticians re-examined the data, they concluded that the sequence of events actually had a one in nine chance of happening to any nurse in any hospital.

The ramifications of this statistical illiteracy (a failure to understand not just the numbers, but how the numbers were reached) may have become more apparent over the last two years of the pandemic, during which we’ve been bombarded with numbers and asked to “follow the data” as a justification for various extraordinary policies. Misinterpreting or misunderstanding the data has also been cited as one reason why pockets of resistance to vaccination remain.

Yet this is hardly surprising given the state of basic numeracy: in the late ’90s, one study found that 96% of US high-school graduates were unable to convert 1% to 1 in 100. Some 46% were unable to estimate how many times a flipped coin would come up heads in 1,000 tosses — 25, 50 and 250 were the most common wrong answers. And a whopping 96% of the general population struggles with solving problems relating to statistics and probability. Small wonder some have spoken of a collective phobia when it comes to thinking through data.

“We typically just don’t have the ability to ask the right questions of the data,” reckons Dr. Niklas Keller, a cognitive scientist at Berlin’s Max Planck Institute. “And this is especially true when not all actors will present the data in a transparent way. We also struggle with societal aspects — the still widespread illusion of certainty, for example. Studies show that people tend to think of DNA and fingerprint tests as certain, but in fact they’re not. That just reflects our desire for certainty.”

But our statistical illiteracy is also partly a matter of how our brains are wired: to assess data that is immediately relevant to our survival, not the data that now spills out of an ever more complex, interconnected world in which events are closely counted. Cognitive biases such as framing lead most of us to conclude that a disease that kills 200 out of 600 people is considerably worse than one in which 400 out of 600 people survive, even though the two outcomes are identical. We’re wired to find patterns, but that messes with our ability to make sense of data. We think in terms of short runs of numbers and short-term trends, not the overall picture.

According to recent studies by Sam Maglio, assistant professor at the University of Toronto’s Rotman School of Management, we even perceive momentum in data. If a figure, say the percentage chance of rain, goes up, we assume the trend will continue. What’s more, changes in probability push our behavior in different directions even when the resulting probability is identical: we’re more ready to take a punt on a potentially corked bottle of wine if the risk falls from 20% to 15% than if it rises from 10% to 15%.

“We’re meaning-making machines,” says Maglio, “and it’s tough to do the mental legwork that reveals our conclusions about the numbers might in fact be otherwise. In a way we set ourselves up for failure, because there’s so much data around now that we’re likely more inclined to use shortcuts in assessing it. They get us to the right answers sometimes, but not all the time. But then if we properly thought through all the data we’re presented with now, we’d have no time for anything else.”

But, as Stefan Krauss, professor of mathematics at the University of Regensburg, stresses, statistics is a relatively new idea: compared with other mathematical disciplines, which go back millennia, probability calculus is a mere three centuries old. “And that’s really too late for it to be responded to naturally,” he argues. “You might even conclude that our need for probability is not that high, or we’d have formalized its use for much longer.”

The worrying aspect is that this data illiteracy reaches beyond the general public and into more specialist circles. It’s not just lawyers who don’t understand statistics, or perhaps willfully misuse them. Some politicians don’t get them either: in 2007, former New York mayor Rudy Giuliani defended the US’s profit-led health system by declaring that a man’s chance of surviving prostate cancer in the US was twice that of a man under the “socialized medicine” of the UK’s National Health Service. But this misreading of the data ignores differences in how cases are detected and counted, as becomes obvious once the near-identical mortality rates in the two countries are considered.

Some doctors don’t get the data either. Experiments by the Harding Center for Risk Literacy in Berlin, led by its director Gerd Gigerenzer, have shown, for example, that only 21% of gynecologists could correctly say how likely a woman with a positive screening result is to actually have cancer, given a few basic figures. With only four answers to choose from, that is worse than if the gynecologists had guessed at random. A 2020 study likewise found that doctors overestimate the pre-test probability of disease by a factor of between two and 10. A JAMA Internal Medicine study this year found practitioners overestimating the probability of breast cancer by a huge 976%, and of urinary tract infection by a disturbing 4,489%.


Such cases matter because without the ability to interrogate data, we’re left open not just to injustice or medical mistreatment, which makes a poor grasp of data an ethical problem, but also, as Gigerenzer has put it, “to political and commercial manipulation of [our] anxieties and hopes, which undermines the goals of informed consent and shared decision making.” In other words, you need to understand the numbers to be an effective citizen.

“Statistics are everywhere. And statistical literacy is something we all attempt every day — we balance risk and probability all the time,” says Dr. Simon White, senior investigator statistician at the University of Cambridge, and one of the Royal Statistical Society’s “statistical ambassadors,” part of the organization’s bid to improve statistical understanding. “It’s when the numbers get attached — to a mortgage, employment, our health — that they really hit home. But analyzing all this data around us is really just a specialized branch of critical thinking. And the problem is poor critical thinking. When people think numbers through, they can see their answer is ridiculous.”

Take one UK poll during the pandemic which suggested that many people, having read the data in the press, thought the mortality rate from infection was around 6%. That doesn’t sound too high … until you consider the UK population of 68 million. Even if only a fraction of the population were infected, you would have hundreds of thousands of bodies.
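The arithmetic is worth spelling out. This short Python sketch multiplies the UK population by the perceived 6% fatality rate under a few infection levels; the shares of the population infected are hypothetical assumptions for illustration, not figures from the poll.

```python
UK_POPULATION = 68_000_000        # figure quoted in the text
PERCEIVED_FATALITY_RATE = 0.06    # the ~6% rate many respondents believed


def implied_deaths(population: int, share_infected: float, fatality_rate: float) -> float:
    """Deaths implied if a given share of the population catches the virus."""
    return population * share_infected * fatality_rate


# The infection shares below are hypothetical, chosen only for illustration.
for share in (0.1, 0.5, 1.0):
    deaths = implied_deaths(UK_POPULATION, share, PERCEIVED_FATALITY_RATE)
    print(f"{share:>4.0%} infected -> about {deaths:,.0f} deaths")
```

Even if only one person in ten were infected, the perceived rate would imply roughly 408,000 deaths; if everyone caught the virus, more than four million.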

Is there a solution to this widespread data illiteracy? Some statisticians have argued that, as data becomes ever more part of our lives, statistical thinking should be given as much priority in education as reading, writing and basic math (much of which focuses on difficult concepts such as geometry and algebra that rarely find application in the real world). It has even been suggested that statistics isn’t part of general education precisely because most people don’t know what they don’t know: that their own, and others’, interpretation of data is so often wrong. Statistical literacy also needs to be ramped up in legal and medical training (in the latter, it could narrow the often huge differences in diagnoses between consultants), as well as in the training of journalists.

Certainly the media needs to play a part. There is, of course, the clickbait temptation to reinterpret statistical information to create a more attention-grabbing story. Relative changes make for more instant drama; absolute changes are often humdrum, though few people, outside of those trying to sell a new drug, know the crucial difference. “Assessing data is so often a question of what data you’re given, the context, how the data is handed down from the experts, through PRs, through the press,” reckons Krauss.

Indeed, there is also the matter of how data is presented. As Keller illustrates, the Roman numeral system basically made it impossible to multiply or divide in one’s head — you carried an abacus for that. “And if we had the same system [in use] today, it would probably be argued that we have a ‘multiplication bias.’ More often than not it’s the way [statistical] information is presented [that’s the problem], not the mind of the actor,” he says.

It doesn’t help that the mass media has so often presented data about COVID-19 deaths without context (reference to base rates, for example) and on logarithmic graphs rather than linear ones, even though recent experiments by Yale’s Alessandro Romano and colleagues suggest that most readers don’t understand them. Only 41% of respondents could correctly answer a basic question about a logarithmic graph, compared with 84% of those shown a linear scale. More important still are the ramifications: respondents shown a linear-scale graph express different attitudes and policy preferences towards the pandemic than those shown exactly the same data on a logarithmic one.

“The fact is that if you do work with data it’s unreasonable to expect people who don’t to grasp it in the same way, yet that’s what we do all the time,” says Romano. “But finding new ways to present data matters, because while data has always been important, now understanding it, and understanding that it’s always selling a message, is crucial to understanding the world.”

Expressing data, and probabilities in particular, as percentages tends to confuse many people, but expressing them as what are called “natural frequencies” (not 80% of people, but 8 out of 10 people) proves much easier to grasp. Another recent study found that performance on statistical tasks rose from 4% to 24% when a natural frequency format was used: still low, but a sixfold improvement. Yet natural frequencies aren’t typically used, both because scientists who work with such data tend to regard them as a less serious form of mathematics and because teachers are rarely trained to think in terms of them.
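Here is how the two formats compare on a screening question of the kind put to the gynecologists. The figures are assumptions chosen for illustration: 1% of women screened have breast cancer, 90% of those with cancer test positive, and 9% of those without it test positive anyway. This Python sketch computes the chance that a woman with a positive result actually has cancer, once with conditional probabilities (Bayes’ theorem) and once by counting women in a hypothetical group of 1,000.

```python
def ppv_from_probabilities(prevalence: float, sensitivity: float,
                           false_positive_rate: float) -> float:
    """Probability format: Bayes' theorem on conditional probabilities."""
    true_pos = prevalence * sensitivity
    false_pos = (1 - prevalence) * false_positive_rate
    return true_pos / (true_pos + false_pos)


def ppv_from_natural_frequencies(group_size: int, prevalence: float,
                                 sensitivity: float,
                                 false_positive_rate: float) -> tuple[int, int]:
    """Natural-frequency format: count women instead of multiplying probabilities.
    Returns (positives who have cancer, all positives)."""
    with_cancer = round(group_size * prevalence)                             # 10 of 1,000
    without_cancer = group_size - with_cancer                                # 990
    positive_with_cancer = round(with_cancer * sensitivity)                  # 9
    positive_without_cancer = round(without_cancer * false_positive_rate)    # about 89
    return positive_with_cancer, positive_with_cancer + positive_without_cancer


# Illustrative assumptions, not data from the studies cited above.
PREVALENCE, SENSITIVITY, FALSE_POSITIVE_RATE = 0.01, 0.90, 0.09

ppv = ppv_from_probabilities(PREVALENCE, SENSITIVITY, FALSE_POSITIVE_RATE)
print(f"Probability format: {ppv:.1%}")
sick, positives = ppv_from_natural_frequencies(1_000, PREVALENCE, SENSITIVITY,
                                               FALSE_POSITIVE_RATE)
print(f"Natural frequencies: {sick} out of {positives} women who test positive have cancer")
```

Both routes give the same answer, a little under one in ten, but the counting version makes the reason visible: the nine true positives are swamped by about 89 false alarms.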

Take, for example, the way the effectiveness of breast cancer screening has been presented in the UK: as providing a “20% mortality reduction,” typically misread as meaning that 20% of women are saved by undergoing the procedure. The real figure is about one in 1,000: four out of every 1,000 screened women die from the disease, compared with five out of every 1,000 unscreened women. Sure, that’s a 20% difference, but putting it that way hardly gets to the truth of the matter.
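Worked through with the figures above (deaths from the disease per 1,000 women), the two ways of describing the same result are as follows; the number needed to screen is the standard reciprocal of the absolute risk reduction:

$$
\begin{aligned}
\text{relative risk reduction} &= \frac{5 - 4}{5} = 20\% \\
\text{absolute risk reduction} &= \frac{5}{1000} - \frac{4}{1000} = \frac{1}{1000} = 0.1\% \\
\text{number needed to screen} &= \frac{1}{\text{absolute risk reduction}} = 1000
\end{aligned}
$$

On these figures, about 1,000 women must be screened for one of them to avoid dying of the disease.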

The consequences of this slipshod handling of data can be profound. In 1995 the UK’s Committee on Safety of Medicines warned that a new oral contraceptive pill doubled the risk of thrombosis. Thousands of women consequently came off the pill, and the following year saw a spike in unwanted pregnancies, as well as an estimated 13,000 additional abortions. But that alleged “doubling”? It amounted to an increase in risk from one in 7,000 to two in 7,000. All too often, an assessment of relative risk (how much a number grows or shrinks) leaves out the size of the risk to start with. Putting it in absolute terms gives a very different picture. We need more of that simplicity.
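A minimal Python sketch makes the contrast concrete using the thrombosis figures above. It relies on exact fractions from the standard-library `fractions` module, so that “one in 7,000” stays exactly that rather than becoming a rounded decimal; the helper name `describe_risk_change` is purely illustrative.

```python
from fractions import Fraction


def describe_risk_change(before: Fraction, after: Fraction) -> dict[str, Fraction]:
    """Contrast relative and absolute measures of the same change in risk."""
    return {
        "relative_change": (after - before) / before,  # 1 means +100%, i.e. "doubled"
        "absolute_change": after - before,             # extra cases per person exposed
    }


# Figures from the text: thrombosis risk rising from 1 in 7,000 to 2 in 7,000.
change = describe_risk_change(Fraction(1, 7000), Fraction(2, 7000))
extra = change["absolute_change"]
print(f"Relative change: +{float(change['relative_change']):.0%}")
print(f"Absolute change: +{extra.numerator} in {extra.denominator:,} women "
      f"({float(extra):.4%})")
```

The same change is a headline-grabbing 100% in relative terms and one extra case per 7,000 women in absolute terms.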

This article appeared in an InsideHook newsletter.