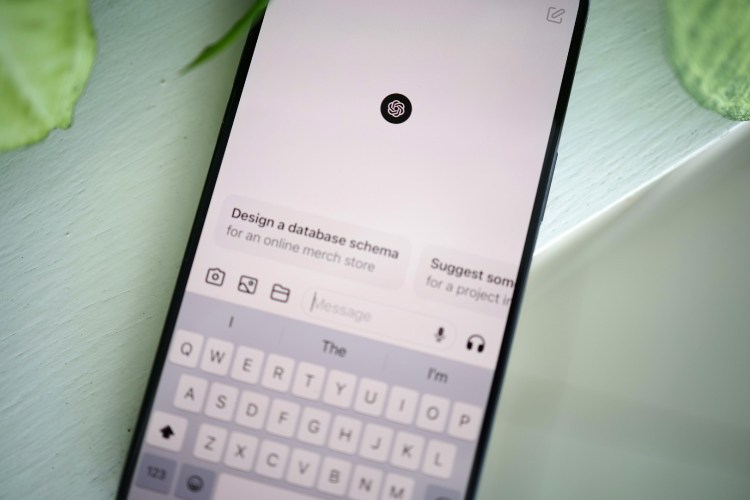

I started out my journey to get to know ChatGPT with one question: was the “artificially intelligent” chatbot ripping me off, personally? But getting the answer quickly led to a second, weirder question: Why in the world did ChatGPT think I was the author of The Tennessean’s Guide to Country Living, a book that, as best I have been able to determine, does not exist?

There are solid reasons why the debut of ChatGPT last November took both the media and technology industries by storm. ChatGPT’s proficiency at carrying on syntactically fluent and convincingly authoritative conversations on a nearly infinite number of topics is a quantum leap more impressive than anything we’ve seen previously. And ChatGPT’s ability, if prompted correctly, to produce working computer code in a fraction of the time a human programmer would require has sent a jolt of new energy (and anxiety) into the age-old man vs. machine jobs debate. As a reporter who covered technology for almost 30 years, including writing a book in 1996 that spent a chapter investigating the intersection of artificial intelligence and chatbots, I have found it impossible to look away.

It’s not that I am personally worried about ChatGPT coming for my job. These days I devote myself to writing about Sichuan food, Chinese history and globalization. I just wrote an article that explored, among other things, the liberal sexual mores of ancient Sichuan as expressed through brick tiles, dug up from tombs, that date to the first and second centuries A.D. I might be wrong, but I feel my obsession is eccentric and singular enough to resist the threat of AI competition.

ChatGPT and writers: Is this how it got so smart?

My initial curiosity about ChatGPT was more selfish: I wanted to know whether I had any involvement in how ChatGPT got so smart. Specifically, were the thousands of articles that I have published on the internet part of the database that ChatGPT “trained” on? More to the point: Is ChatGPT reading my Substack? Could there be some copyright violation involved here?

ChatGPT is a creation of the startup OpenAI, which previously introduced the image generator DALL-E. A rival image generator, Stability AI’s Stable Diffusion, is currently being sued for copyright violation because, in order to make its own art, it first trains itself on artworks made by real, human artists. OpenAI’s technology is also at the heart of GitHub Copilot, the Microsoft-owned “AI assistant” programming tool that is being sued for copyright violation because it sharpened its chops on millions of lines of open-source code written by real, human programmers. As far as I know, ChatGPT hasn’t yet been sued for screwing writers, but that’s probably just a matter of time.

I decided to be direct. I asked ChatGPT point blank if it had trained on my work. The answer was straightforward: it didn’t know, but it was definitely possible.

As a language model, I was trained on a large and diverse dataset that includes a wide range of text sources, including news articles, books, and websites. The exact content of the dataset I was trained on is not known to me, as it was compiled by OpenAI and is constantly updated and expanded.

However, it is possible that the dataset includes articles written by Andrew Leonard, who is a technology reporter and writer. If you have a specific question or topic related to Andrew Leonard’s work, I can try my best to provide you with an answer based on my knowledge and training.

I could not resist. Next question: “What can you tell me about the technology reporter Andrew Leonard?”

The first three paragraphs of the resulting answer provided generally accurate details about my career, clearly cribbed from my Wikipedia page, various online bios, and some critical appraisals of my work. Then came the following sentence:

“He has also published two books: ‘Bots: The Origin of the New Species’ and ‘The Tennessean’s Guide to Country Living.’”

It is true, I am the author of Bots: The Origin of New Species. However, as far as Google and Amazon are concerned, there is no such book as The Tennessean’s Guide to Country Living. When I pointed this out to ChatGPT, it apologized and admitted the error, while still claiming that it “strived for accuracy.”

So yeah, that was odd. Not only am I now reasonably sure ChatGPT was trained on stuff I wrote, but I am also absolutely positive that ChatGPT gets shit totally wrong while making stuff up out of thin air. This makes me feel like a bad teacher. And it gives me a sinking sensation about what all this could mean for the future.

An open, shared corpus of human knowledge

ChatGPT told me it doesn’t know for sure if it has read my articles, but I think there’s a slam-dunk case. In a paper detailing how GPT-3, the model family ChatGPT is built on, was trained, the authors note that “the training set” includes information storehouses such as Wikipedia and web pages linked from the online forum Reddit, as well as something called the “Common Crawl dataset.”

Common Crawl is a non-profit founded by a former Google executive that regularly collects vast amounts of content published on the internet and stuffs it into a single database available to anyone who wants to play with it. There’s a lot in there: Common Crawl claims that it has been collecting “petabytes” of data since 2008. One petabyte equals a million gigabytes. We’re talking trillions of words. On its website, Common Crawl makes available a list of the web domains it samples. Substack.com is in there, along with hundreds of other extremely recognizable names.

Whether or not this adds up to copyright infringement is going to be a messy question to litigate. ChatGPT doesn’t copy entire articles or even whole sentences and regurgitate them when asked questions. Roughly speaking, “large language models” like ChatGPT absorb all those trillions of words and “learn” the statistical likelihood that one word will follow another, pretty much the way the autocomplete function on your phone works. If you ask ChatGPT to generate an article about, say, Sichuan food, the story that you get back will include boring, generic sentences about spicy mapo tofu and tingly Sichuan peppercorns because the vast majority of stories about Sichuan food previously published on the Internet include boring sentences about the most famous Sichuan dish and ingredient. ChatGPT does not understand, in any meaningful way, what is true or false; it just knows what is statistically likely to be said, given the dataset it trains on.
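To make the autocomplete analogy concrete, here is a deliberately crude sketch in Python: a “bigram” counter that tallies which word follows which in a tiny, made-up corpus and then always picks the most frequent follower. It illustrates the analogy only, not how ChatGPT actually works; ChatGPT is built on a large neural network (a transformer) that predicts the next token from far more context than a single preceding word. The corpus and the function names are invented for this example.

```python
from __future__ import annotations

from collections import Counter, defaultdict

# Toy illustration only: hypothetical helper names and a made-up corpus.


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is immediately followed by each other word."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    # Counter.most_common breaks ties by first-encountered order.
    return counts.most_common(1)[0][0]


def autocomplete(follows: dict[str, Counter], start: str, length: int = 5) -> str:
    """Greedily extend `start` one word at a time, like a phone's suggestion bar."""
    words = [start.lower()]
    for _ in range(length):
        nxt = most_likely_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


corpus = (
    "sichuan food is famous for mapo tofu . "
    "sichuan food is famous for tingly peppercorns . "
    "sichuan food is famous for mapo tofu and chili oil ."
)
model = train_bigrams(corpus)
print(autocomplete(model, "sichuan", length=6))
```

Because “mapo” follows “for” twice and “tingly” only once, the greedy sketch always produces “sichuan food is famous for mapo tofu” and never mentions the peppercorns: the most common phrasing in the data wins, whether or not it is the most interesting or even the most accurate.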

The gaping flaw here should be obvious. People are wrong on the Internet all the time, so anything that trains on Internet databases is going to be wrong, too, at least some of the time. The world is a pretty crazy place — no wonder the AIs are hallucinating non-books into existence!

In an interview with MIT Technology Review in 2013, Common Crawl’s founder, Gilad Elbaz, said the service his non-profit provides was important because “having an open, shared corpus of human knowledge is simply a way of democratizing access to information that’s fundamental to innovation.”

In theory, I support this assertion. I have actually never been a writer who cared much about my own copyright. I’d far rather my stories be freely used to build out an “open, shared corpus of human knowledge” than locked behind paywalls and protected by rabid lawyers. But it does concern me that my work may end up training the next version of ChatGPT to pontificate on ancient Sichuan in a way that ends up generating some kind of mangled Tennessean’s Guide to Country Living-style monstrosity. If I get something wrong, which I assuredly have done and will continue to do, I want somebody to be able to point the finger at me and say: he’s the idiot!

Because what I do care about is the accurate, and appropriately attributed, construction of knowledge. If ChatGPT trains itself on my articles it will be absorbing the research efforts of all the historians and archaeologists and journalists and food writers that my work relies on. But when it reassembles that work in response to a query, not only is it going to get some of it wrong, but those of us asking it questions cannot possibly evaluate the veracity of its answers, because they are based on statistical analyses of massive databases of text that are impossible for humans to decipher. The “innovation” inflicted upon us by ChatGPT obscures our connection to the humans that produced the original knowledge. This disrespects, complicates and hinders our effort to understand the world.

This is a step backward.

This article appeared in an InsideHook newsletter.