Humans have competed against machine intelligences since long before AI became a highly touted buzzword. Human chess players have faced off against software for decades, and computerized opponents have long since established their dominance over their organic counterparts. The latest front in the competition between man and machine opened in a different arena, and its outcome has plenty to say about where AI might be headed.

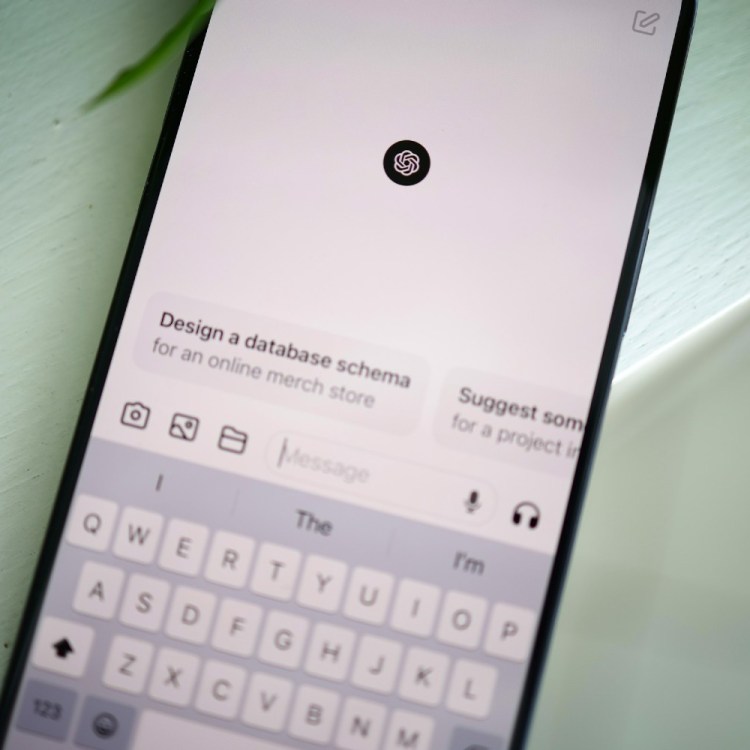

Writing at Live Science, Lyndie Chiou discussed the nature and terms of the competition, which took place in Berkeley earlier this year. The competition centered on OpenAI’s o4-mini model, and mathematician Elliot Glazer assembled a set of math problems of varying difficulty for it to solve. Working with Epoch AI, Glazer offered mathematicians an incentive to come up with problems o4-mini could not solve: $7,500 per question.

The latest iteration of this challenge took place over a weekend in May and saw 30 mathematicians gather in California, where they split into teams of six. As it turns out, this latest AI model did far better at answering questions than its predecessors, with Chiou describing the chatbot as having “unexpected mathematical prowess.”


In the end, the assembled mathematicians came up with 10 problems that the chatbot could not solve. What the results of this competition mean is still up for debate, though. “If you say something with enough authority, people just get scared,” mathematician Yang-Hui He told Live Science. “I think o4-mini has mastered proof by intimidation; it says everything with so much confidence.”

This article appeared in an InsideHook newsletter.