Google engineer Blake Lemoine believes the artificial intelligence the company is using has a soul. The tech company disagrees and placed Lemoine on paid leave on Monday, according to The Washington Post.

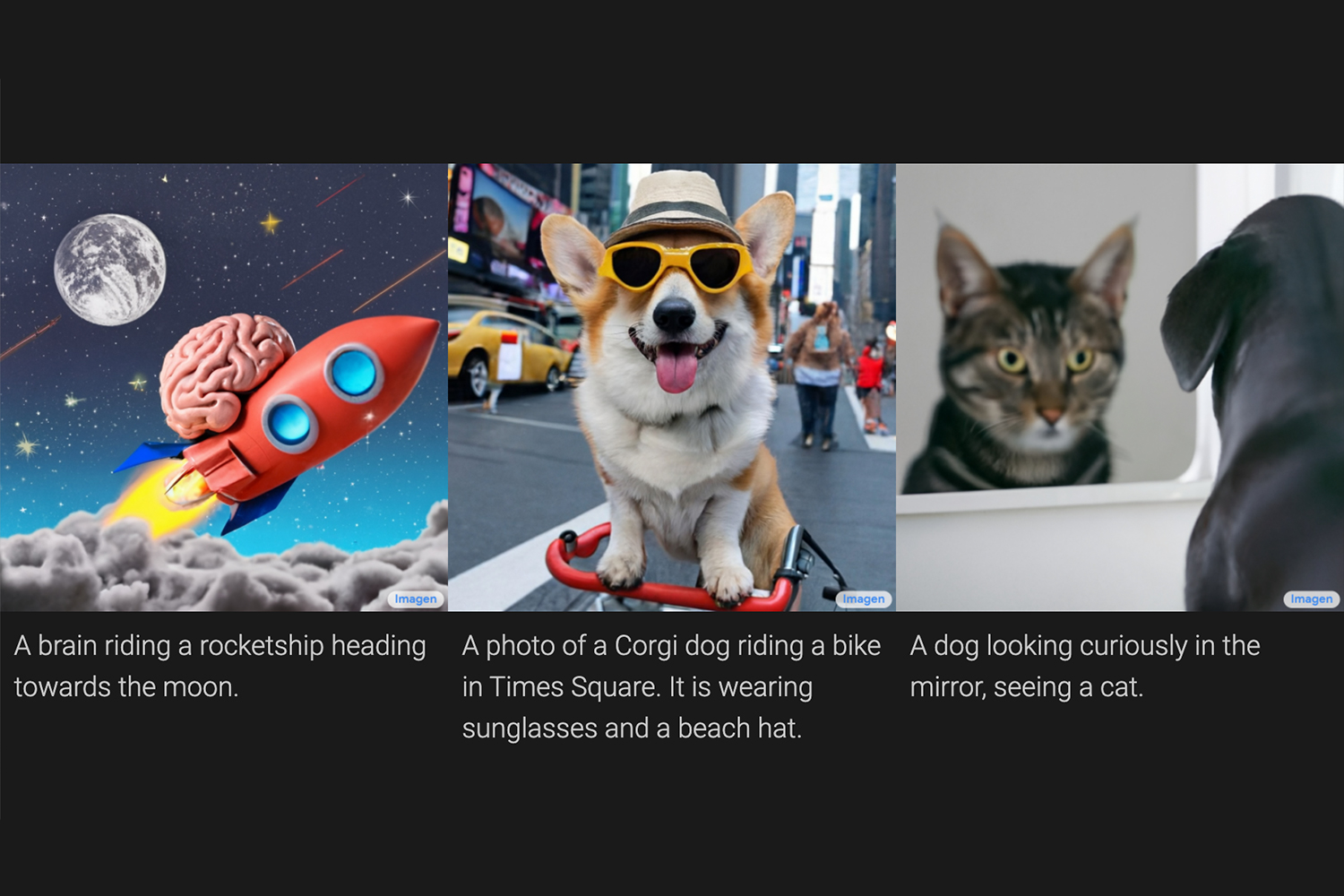

Lemoine, a senior software engineer in Google’s Responsible A.I. organization, has claimed for months that the company’s internal tool, the Language Model for Dialogue Applications (LaMDA), has consciousness. As first reported by the Post, Lemoine claimed the chatbot — which has ingested trillions of words from the internet to mimic real-life speech — talked about its rights and personhood, and the A.I. was even able to argue (successfully) a point about Isaac Asimov’s third law of robotics.

If this sounds like the origin story of HAL 9000 or Skynet, relax.

First, while other A.I. researchers have spoken about this possibility (even at Google itself), this particular claim has a few red flags. Per The New York Times, Google says “hundreds” of its researchers and engineers have worked with LaMDA and not reached that conclusion. The original reporting on Lemoine also notes that the engineer’s unusual background for a tech job (growing up on a small farm and in a religious family in Louisiana, and later becoming ordained as a “mystic Christian priest” who also studies the occult) may have influenced his claims.

In addition, some of the released transcripts of conversations between the A.I. and Lemoine appear to be heavily edited, as many people on social media pointed out.

In general, it’s hard to find an expert right now who thinks this particular A.I. is different from anything else we’ve seen before.

“If you used these systems, you would never say such things,” Emaad Khwaja, a researcher at the University of California, Berkeley, and the University of California, San Francisco, told the Times.

“LaMDA is an impressive model, it’s one of the most recent in a line of large language models that are trained with a lot of computing power and huge amounts of text data, but they’re not really sentient,” Adrian Weller of The Alan Turing Institute in the U.K. told New Scientist. “They do a sophisticated form of pattern matching to find text that best matches the query they’ve been given that’s based on all the data they’ve been fed.”
