A new text-to-image generator created by Google has the potential to increase instances of fake news and harassment, although the technology is still in an early phase.

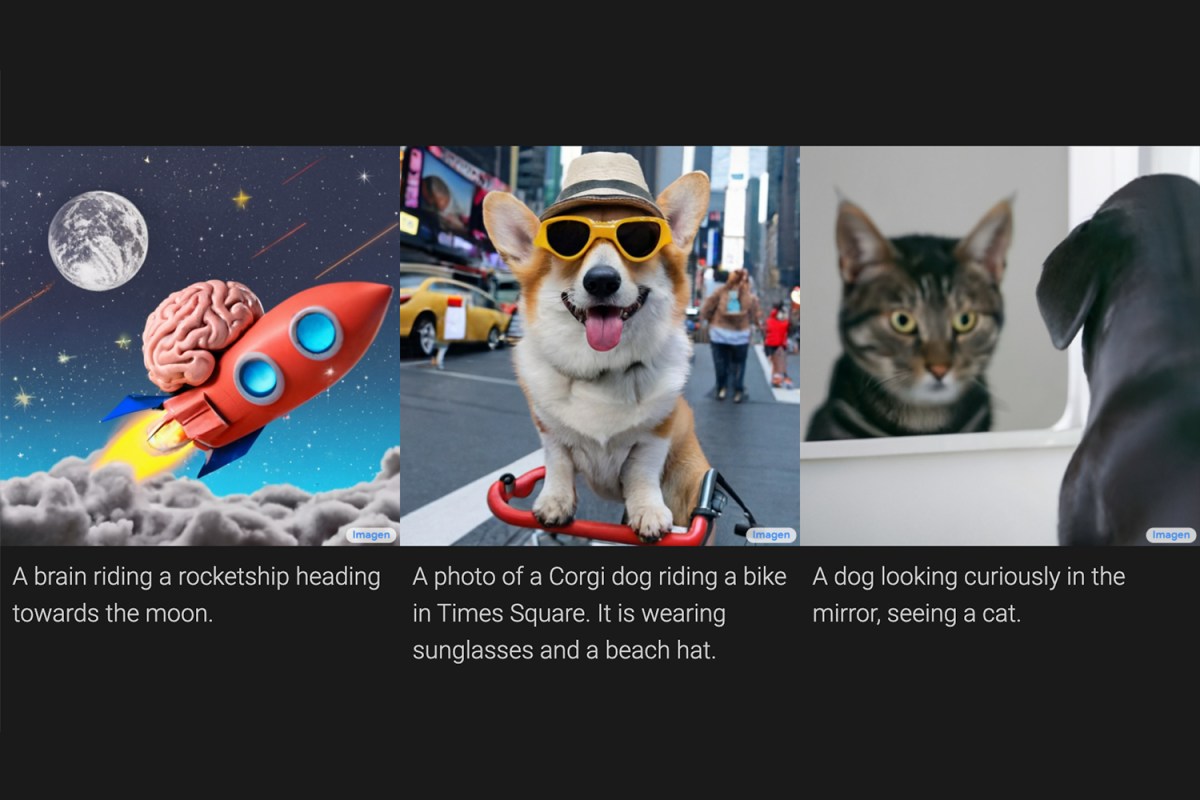

Using artificial intelligence, Google’s Imagen would let you type in a phrase like, say, “a blue jay standing on a large basket of rainbow macarons” (a real example Google uses) and get back a photorealistic depiction of that sentence.

What could go wrong?

Fortunately, even the researchers behind the project readily acknowledge the issues raised by turning any thought you type into a realistic image. To counter this, Google has showcased only a limited set of rather ridiculous examples on its Imagen page, and it won’t release code or a public demo.

Even Google’s team doesn’t trust everything they’re lifting from the web. “The data requirements of text-to-image models have led researchers to rely heavily on large, mostly uncurated, web-scraped datasets,” they note. “While this approach has enabled rapid algorithmic advances in recent years, datasets of this nature often reflect social stereotypes, oppressive viewpoints, and derogatory, or otherwise harmful, associations to marginalized identity groups.” Initial tests also showed that Imagen “encodes a range of social and cultural biases when generating images of activities, events, and objects.”

It’s commendable that Google’s researchers openly acknowledge early flaws in their application, even as other text-to-image generators are already in limited use. The Verge sums up Imagen’s potential issues succinctly: “Although text-to-image models certainly have fantastic creative potential, they also have a range of troubling applications. Imagine a system that generates pretty much any image you like being used for fake news, hoaxes, or harassment, for example. As Google notes, these systems also encode social biases, and their output is often racist, sexist, or toxic in some other inventive fashion.”

Google is also showcasing only the best work Imagen has created so far. The technology behind text-to-image generation is still in its infancy and prone to misunderstandings. For now, it’s probably best that we’re only seeing goofy pictures of things like “an alien octopus float[ing] through a portal reading a newspaper.”

Thanks for reading InsideHook.