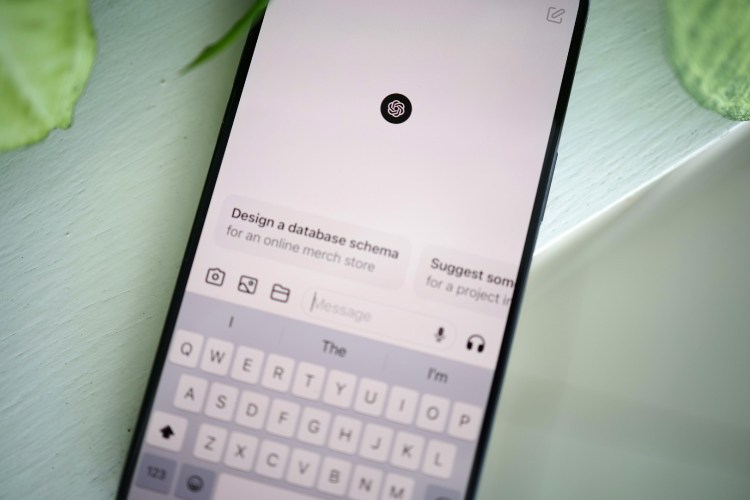

A mental health service used artificial intelligence to provide support for about 4,000 people without notifying customers — which we know because the founder announced the initiative after the fact on Twitter.

Per New Scientist, the free mental health service Koko used a chatbot powered by GPT-3, a publicly available AI built by OpenAI, to provide words of support and encouragement. Founder Rob Morris then explained what happened in a Twitter thread late last week.

“We used a ‘co-pilot’ approach, with humans supervising the AI as needed,” he wrote. “We did this on about 30,000 messages.”

The good news is that messages composed by AI (and supervised by humans) were “rated significantly higher than those written by humans on their own” and response times improved by 50%.

The bad news? Once people realized the responses were crafted by machines, they no longer considered them useful. “[The advice] sounds inauthentic,” Morris said, noting the problem might have been that AI wasn’t “expending” any effort.

In conclusion? Morris said this might be an area where humans will always have an advantage.

Using AI (or a written manual) to guide human responses in a chat session about, say, paying a bill doesn’t seem that controversial. Applying this early-stage technology to mental health without customer knowledge is seriously concerning, and we say this while suggesting AI definitely has a place in health care. Morris suggested that some of the controversy over this practice came about because of a poorly worded tweet; he later clarified that he was not “pairing people up to chat with GPT-3 without their knowledge” but simply offering the AI as a tool for human peer support.

The people using Koko didn’t deserve to be part of an experiment utilizing a nascent technology without their knowledge. Thankfully, it seems like the founder of Koko has learned a valuable lesson: People who need support crave real human interaction.
