We live in a time when people are constantly asking how the justice system functions and whether law enforcement methods are flawed. Probation is another hotly debated subject; in recent years, a number of high-profile cases have prompted closer scrutiny of how these processes work and whether they’re truly just.

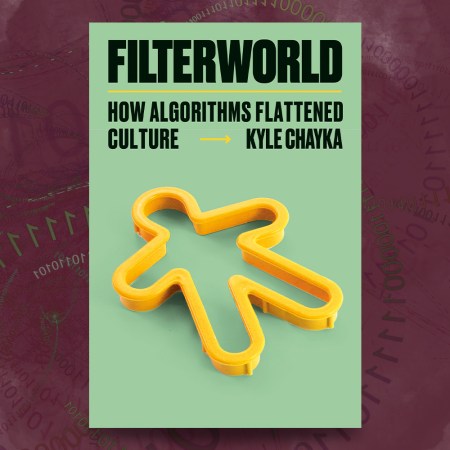

A new article in The New York Times by Cade Metz and Adam Satariano explores another controversial aspect of probation: in some areas, algorithms help determine probation rules and recommend how law enforcement should treat people who are on probation. As the article reports, that’s not the only way algorithms have factored into policing and sentencing.

Metz and Satariano begin with a discussion of an algorithm focusing on probation in Pennsylvania, and expand from there:

> The algorithm is one of many making decisions about people’s lives in the United States and Europe. Local authorities use so-called predictive algorithms to set police patrols, prison sentences and probation rules. In the Netherlands, an algorithm flagged welfare fraud risks. A British city rates which teenagers are most likely to become criminals.

If you’re reading this and wondering whether it lines up with instances of bias in the justice system, you’re not the only one. A ProPublica investigation into one such technology, the risk-assessment tool COMPAS, revealed some unnerving racial disparities in the system. Another study indicated that COMPAS wasn’t particularly accurate, either.

Algorithms can seem to make life far easier for many people. “The algorithms are supposed to reduce the burden on understaffed agencies, cut government costs and — ideally — remove human bias,” write Metz and Satariano. But as their article shows, the actual technology might not live up to that ideal — and the gulf between what something is supposed to do and what it actually does can have a huge impact on many people’s lives.

Thanks for reading InsideHook. Sign up for our daily newsletter and be in the know.