More and more devices using AI and machine learning technology are becoming available for purchase, something that’s just as true in the world of consumer products as it is in the medical industry. A 2024 article in the industry publication MedTech Dive took note of the rise in FDA approvals of medical devices using AI from 1995 to 2023. Just six products of this type were approved in 2015; by 2023, that number had risen to 221.

The question lingers: is the FDA’s current oversight enough, or is more regulation needed? A paper published this month in the journal PLOS Digital Health makes an emphatic case for the latter. The authors contend that “many AI-enabled tools are entering clinical use without rigorous evaluation or meaningful public scrutiny,” and they examine the FDA’s existing processes for evaluating AI devices and tools for medical use.

In their summary, the paper’s authors acknowledge the good work that AI can do in a clinical setting, but also point to potential issues based on the FDA’s existing approvals. “Many tools lacked clear demonstration of clinical benefit or generalizability, and critical details such as testing procedures, validation cohorts, and bias mitigation strategies were often missing,” they wrote.

“AI tools are only as good as the data they are trained on,” the researchers wrote. “When these datasets are biased or incomplete, the resulting systems perpetuate or even amplify biases reflecting patterns of social inequalities.”

As Nature’s Mariana Lenharo pointed out, one of the issues this paper raises is the constant evolution of AI tools due to the data on which they’re trained. It’s not hard to imagine a scenario where a device that works properly during the evaluation period ends up ingesting more data that leads to improper diagnoses of multiple patients.

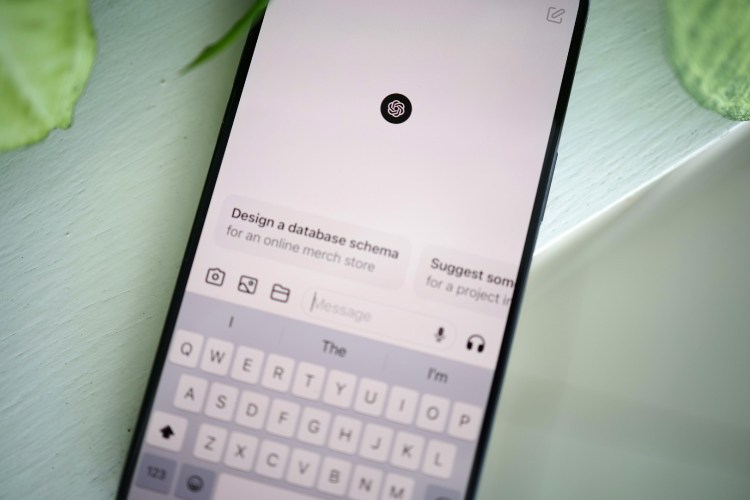

Is AI Therapy Really a Good Idea?

It’s here, so let’s examine the pros, cons and unknowns of outsourcing your counsel to an LLM“I think relying on the FDA to come up with all those safeguards is not realistic and maybe even impossible,” MIT researcher (and one of the paper’s authors) Leo Anthony Celi told Nature.

In their paper, Celi and his colleagues make the case for a larger regulatory framework — one that would “collaborate closely with universities, AI experts, and healthcare systems, leveraging their research capabilities and domain knowledge to enhance oversight.” Will it lead to change in the way these devices are evaluated and regulated? That’s less clear — but this paper may well look prescient before too long.
