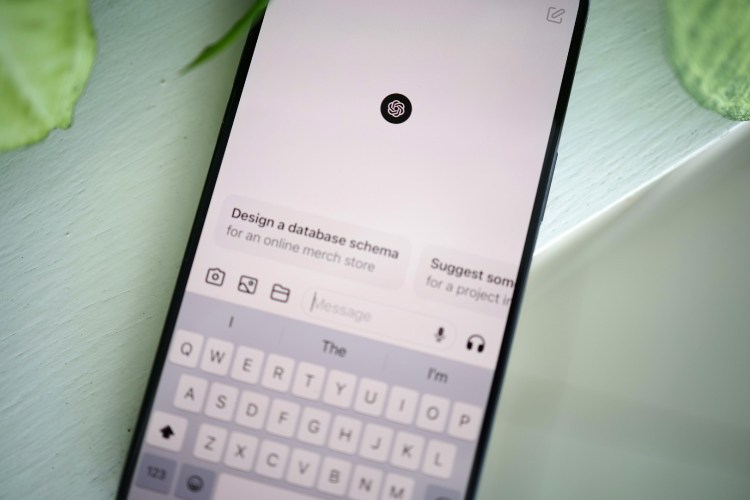

In the weeks before the public release of Bard, Google’s new AI chatbot similar to ChatGPT, Google employees were asked to test the artificial intelligence tool. According to a new Bloomberg report citing 18 current and former employees as well as internal documentation, workers who evaluated Bard warned Google not to release it and called the chatbot a “pathological liar.”

In other internal comments cited by Bloomberg, one Google employee said Bard was “cringe-worthy.” Others detailed how the chatbot provided dangerous advice on landing a plane and scuba diving. However, others at Google say they felt like Google’s safety checks were efficient and that the program was safer than other chatbots.

In an internal messaging group within the company, one employee wrote in February that Bard was “worse than useless” and warned the company not to launch. About 7,000 employees viewed the message, with many agreeing, according to Bloomberg. These findings are sparking questions about whether Google is compromising ethical standards while prioritizing competition with other companies.

The Bloomberg report also details steps that were skipped in the rush to release the product, including AI governance lead Jen Gennai saying that certain production compromises might be necessary for a quicker launch. According to Bloomberg, Gennai also overruled a risk evaluation submitted by her team members one month after the February internal message.

The risk evaluation said Bard was not ready because it could cause harm. In a statement, Gennai said the decision wasn’t solely hers but that of senior leaders across other groups.

“We are continuing to invest in the teams that work on applying our AI Principles to our technology,” said Brian Gabriel, a spokesperson for Google, in response to questions from Bloomberg.

It’s unclear what Google’s next steps in Bard’s development look like. In the meantime, you can join the waitlist to access Bard, if you still want to.
