Whenever I come across a video of a Boston Dynamics robot dancing or doing a backflip, I’m never as impressed or stoked about the progression of technology as the robotics company presumably expects me to be. Instead, I wonder if these robotics engineers have seen literally any sci-fi movie ever, and I find myself hoping climate change wipes us out before the robots have a chance to revolt.

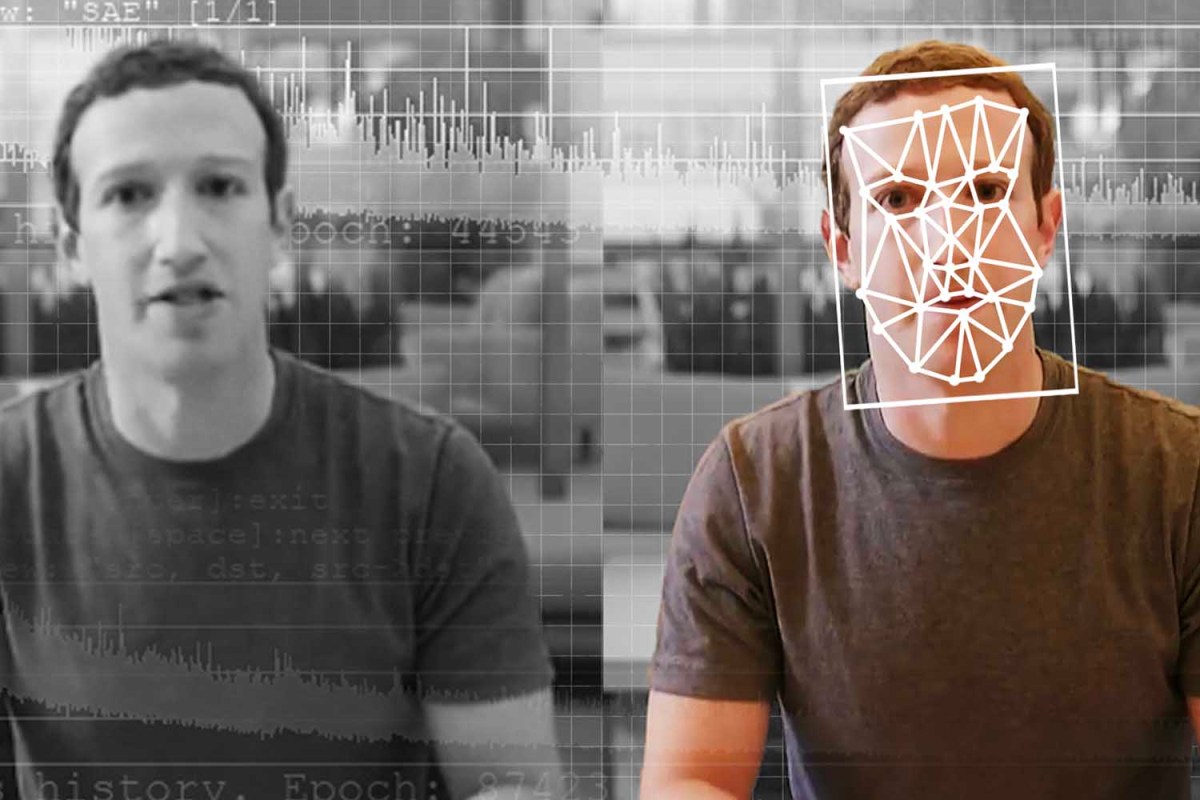

The same is true whenever I read about deepfake technology, which is also only getting more prevalent.

At the beginning of this year, the internet was captivated by the online genealogy platform MyHeritage’s Deep Nostalgia feature, which allowed users to animate still photos of old relatives. Using AI technology, the feature would, essentially, bring these formerly motionless people in the photos to life. They’d move their heads, blink their eyes and flash subtle smiles. Now the company behind that feature, D-ID, is cranking it up a notch with its latest technology: Speaking Portraits.

According to Gizmodo, D-ID has released a technology that allows users to turn still headshots into videos, making photorealistic avatars into which users can input their own audio. Basically, you can make the people in the photos move and say whatever you want.

While it’s still a bit unsettling, I can at least understand the appeal of MyHeritage’s Deep Nostalgia feature and why users might want to bring photos of their late loved ones to life. Just a few months ago, TikTok released a similar feature called the Dynamic Photo Filter that quickly became popular on the app. Browsing through the #dynamicphoto tag on TikTok, you’ll discover a slew of emotional videos from users applying the filter to photos of deceased relatives and loved ones and filming their reactions. Plus, as Gizmodo notes, Deep Nostalgia has its limitations: “Users had no control over the movements in the generated video, and the subject made no attempt to speak.” Speaking Portraits, on the other hand, has far more potential.

So what is the point of this? Other than to make our current problem with misinformation immeasurably worse and harder to control? Gizmodo outlines one potential benefit of the feature:

“The technology can ensure that news agencies always have a ‘live’ presenter on hand for breaking news, even in the middle of the night, but it can also allow someone to appear to deliver the news in other languages they don’t actually speak.”

D-ID also explains the technology will help companies cut production costs when they need to convert articles or corporate marketing materials into videos.

Still, it feels like the negatives outweigh the positives here, and with this technology only becoming more accessible and easier to use, the door is wide open for misinformation and really scary shit like deepfake porn. But at least companies will be able to save a couple of bucks producing their training videos, I guess.
