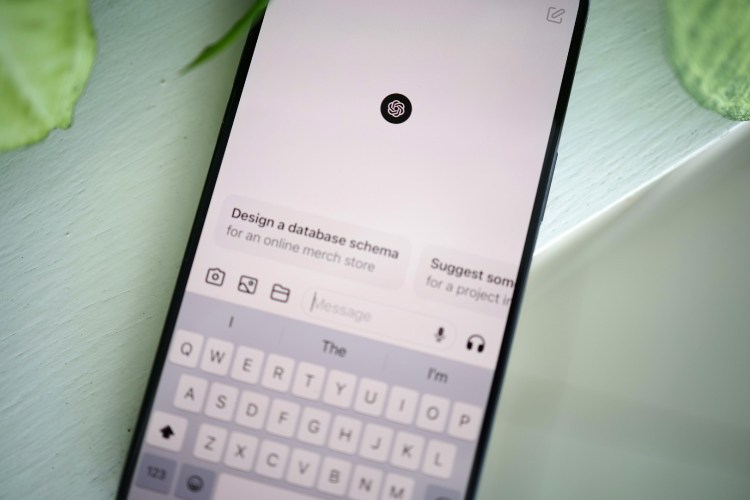

Will chatbots fully transform the world, including the ways in which countless industries operate and countless jobs are performed? That depends on who you ask. It does seem worth noting that some of the same companies investing heavily in chatbots and AI technology have also raised questions about how far that technology can be trusted, especially when it comes to how their own workers use it.

The latest example comes from a Reuters report on the advice Alphabet, Google’s parent company, has given its own employees about working with chatbots, including Bard, its alternative to ChatGPT. Google instructed its employees to avoid entering any “confidential or sensitive information” into chatbots. According to Reuters, that advice also extended to not directly using code generated by chatbots.

It’s good advice, but it also raises a few questions, including where the line falls between “helpful, useful resource” and “massive privacy red flag.”

Alphabet’s concerns about employees entering confidential information also point to broader questions about how chatbots are trained. In recent weeks, it has become clear that the technology underlying AI chatbots was trained on language drawn from, among other sources, large collections of fan fiction.

Just because you’re unsure of what a chatbot has learned from doesn’t mean you haven’t contributed to its ongoing education. If that leaves you alarmed about where chatbots are headed, you’re not alone.

Thanks for reading InsideHook.