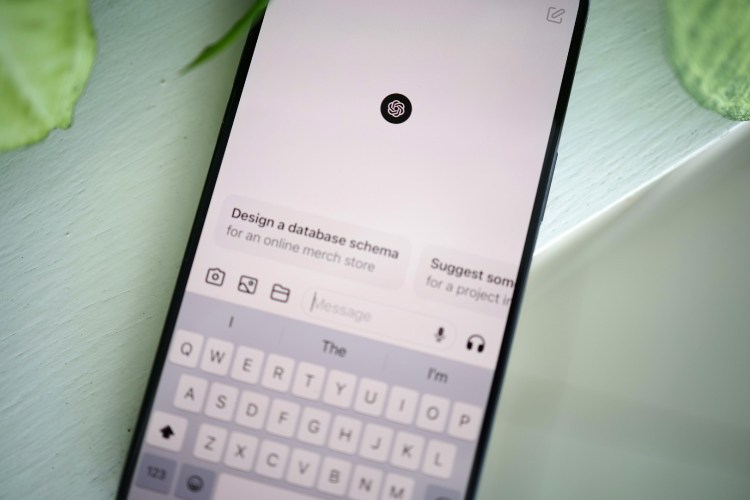

Earlier this year, social media fell head over heels for a photograph of Pope Francis walking outside in a very stylish, very puffy coat. There was only one problem with it: the actual Pope never wore the item in question. Instead, the image was a fake, created through machine learning and AI. And while articles were written debunking the image, it’s impossible to tell how effective they were; it’s entirely possible that there are people out there who still think that the Pope has a pristine down jacket on hand as he makes his way through Vatican City.

The Pope’s choice of outerwear is not likely to involve geopolitical conflict, however. Photographs of active war zones, on the other hand, can shape public policy and influence foreign policy. And if you’ve read this far, it’s probably not going to surprise you that some images purporting to be from Ukraine and Gaza are, in fact, the product of an algorithm and not a photojournalist’s lens.

As Will Oremus and Pranshu Verma reported for The Washington Post, advances in technology have made this phenomenon more prominent. The Post’s reporting led Adobe Stock, a site that sells stock photos, to “take new steps to prevent its images from being used in misleading ways,” as Oremus and Verma phrased it.

Given that Oremus and Verma found thousands of AI-generated images of both Gaza and Ukraine in Adobe Stock’s databases, companies that sell AI-generated news images have a big task ahead of them. Thankfully, as the duo writes of an AI-generated image of an explosion in Gaza, “there’s no indication that it or other AI stock images have gone viral or misled large numbers of people.” But it isn’t hard to imagine a point in the near future when one does.

It’s important to keep an eye on where images come from and to know the signs that an image was generated using an algorithm. Earlier this year, Snopes published a good guide to debunking AI-generated news photos, including some tips on what to look for. Inconsistencies in human hands remain a good clue, though AI is apparently getting better at that as well. It’s an unnerving thing to consider, and it’s unlikely to become less prominent in the coming years.

This article appeared in an InsideHook newsletter.