Editor’s Note: RealClearLife, a news and lifestyle publisher, is now a part of InsideHook. Together, we’ll be covering current events, pop culture, sports, travel, health and the world.

Bumble is swiping left on dick pics before its users ever have to.

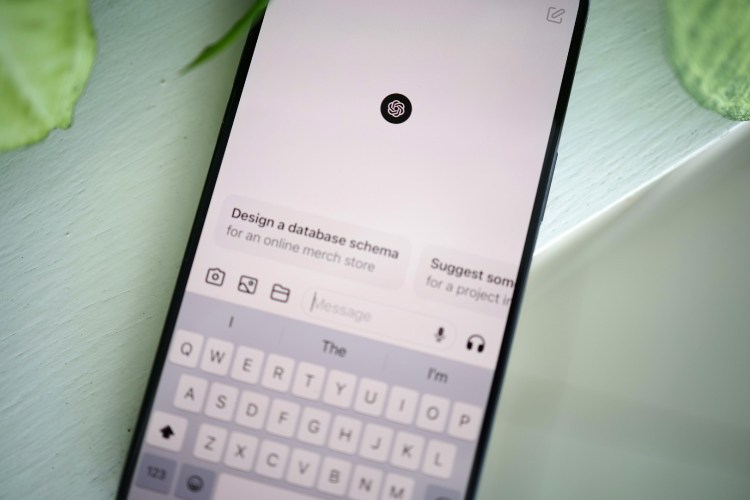

That’s thanks to the dating app’s new “Private Detector” technology, which it claims can identify a sexually explicit photo with 98 percent accuracy. Bumble’s London- and Austin-based parent company, which also owns dating apps Badoo, Chappy, and Lumen, announced Wednesday that all four apps will have the new tech integrated into their services beginning in June.

The feature is algorithmic, Inc. reported, and has been trained by artificial intelligence to capture images in real time and determine whether they are sexually explicit or contain nudity or other sexual content. A flagged image will be stopped dead in its tracks and will not load onto a user’s device unless they opt into taking a peek.

“We can detect anything: guns, apples, snakes, you name it,” Andrey Andreev, the founder of the dating apps’ parent company — known internally as “The Group” — said.

“Andrey and I decided together that we want to use the power of technology and scale to do good things, keep people safe, and make us more accountable,” Bumble founder and CEO Whitney Wolfe Herd added.

And don’t worry about discrimination — the tech is designed to identify any instance of nudity, regardless of gender.
